World's First Synthetic Gun Detection Dataset

Improvis is releasing the world’s first synthetic gun detection dataset, which was made for edgecase.ai. The Edgecase.ai Synthetic Gun Detection Dataset is the largest open source synthetic gun detection dataset in the world.

Why Did We Do This?

We always strive to provide value to the community. In light of the tragedies around us, it is imperative that both enterprises and hobbyists have the data and tools needed to detect guns in the wild.

This is why we are releasing a synthetic gun detection dataset to help researchers and others interested in developing gun detection algorithms.

Why Synthetic Data?

Gathering data to train AI applications is tough and time-consuming. It requires either taking millions of pictures at gun-rights rallies and meetups (impractical), scouring Google and hoping the data you scrape is licensed under Creative Commons (it isn’t), or building your own dataset by working with government agencies (whose data is definitely not shared).

What is synthetic data?

In our specific case, synthetic data is a set of images rendered from hyper-realistic 3D models and scenes built in a 3D environment and animated with 3D game engines such as Unity or Unreal.

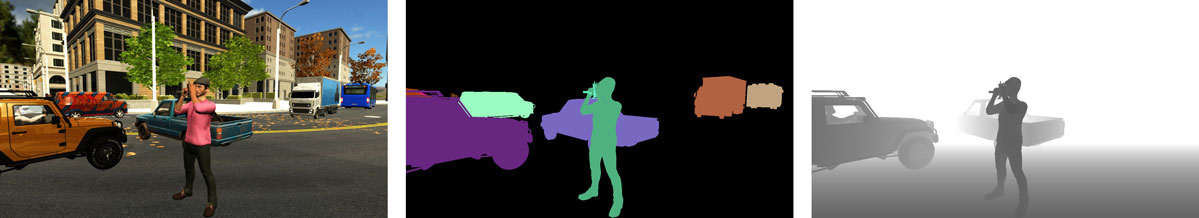

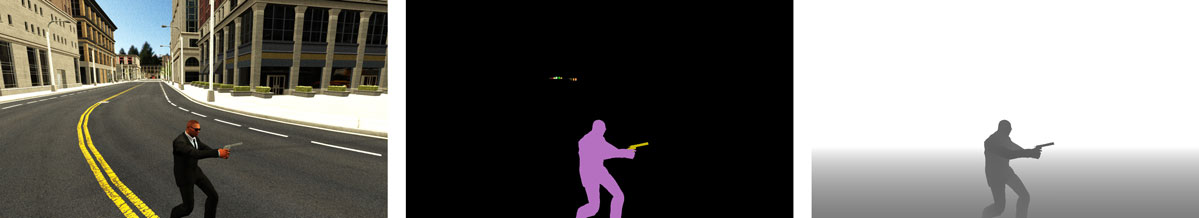

Below are examples of synthetic images with their pixel-perfect annotations/masks and depth maps, as well as a demo video showing how our Synthetic Data Generation Engine produces synthetic data.

Pic. 1. Samples of synthetically generated indoor/outdoor data. Left column: ground truth (“base image”); middle column: semantic mask; right column: depth map (16-bit).
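Because every rendered pixel carries a known label, bounding boxes can be derived directly from a mask rather than drawn by hand. The following is a minimal sketch, assuming a single instance mask stored as a 2D list of integer labels, with a hypothetical label value of 1 for "gun"; it computes a COCO-style `[x, y, width, height]` box and the pixel area. It is an illustration, not our engine's annotation code.

```python
from typing import List, Optional, Tuple


def mask_to_coco_bbox(mask: List[List[int]], label: int) -> Optional[Tuple[List[int], int]]:
    """Return ([x, y, width, height], area) for pixels equal to `label`, or None if absent."""
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value == label:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    # COCO boxes are [x, y, width, height]; +1 because max indices are inclusive pixels.
    bbox = [x_min, y_min, x_max - x_min + 1, y_max - y_min + 1]
    return bbox, len(xs)  # area = number of labeled pixels


GUN_ID = 1  # hypothetical label value for "gun" in the mask
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]
print(mask_to_coco_bbox(mask, GUN_ID))  # ([1, 1, 2, 2], 3)
```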

Synthetic data to the rescue!

The advantages of generating and using synthetic data include:

- Ability to change the camera angle, position, and field of view

- Ability to change an object’s 3D position, rotation, scale, deformation level, etc.

- Control over color and texture

- Control over indoor/outdoor lighting conditions

- Control over time of day and weather

- Control over noise level

- Pixel-perfect instance annotation

- Ability to generate depth maps and point clouds, simulating all 3D sensing parameters

- Very large amounts of data

- Control over the distribution of object counts or of any object property

- Control over obstacles and the visibility of object parts

- Fast post-rendering effects
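Since the renderer knows the exact camera model, a depth map can be turned into a point cloud by inverting the pinhole projection: for a pixel (u, v) with depth Z, X = (u − cx)·Z / fx and Y = (v − cy)·Z / fy. The sketch below assumes a planar (z-buffer) depth map whose 16-bit values are millimetres, with 0 meaning "no depth", and uses hypothetical intrinsics fx, fy, cx, cy; check the dataset's README for the actual depth encoding and camera parameters.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth_mm: List[List[int]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth map (assumed: planar Z in millimetres) to 3D points in metres."""
    points: List[Point] = []
    for v, row in enumerate(depth_mm):
        for u, d in enumerate(row):
            if d == 0:  # assumption: 0 marks pixels without valid depth
                continue
            z = d / 1000.0  # millimetres -> metres
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map and intrinsics, for illustration only.
depth = [[1000, 0],
         [2000, 1000]]
cloud = depth_to_points(depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5)
print(len(cloud))  # 3
```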

The system can provide synthetically generated data that is pixel-perfect, automatically annotated/labeled, and ready to be used as ground truth as well as training data for instance segmentation. Additionally, our system can handle animation and pose changes of humanoids and generate a large variety of synthetic images covering human pose variations. Our synthetic data generation engine also incorporates the recommendations from the Domain Randomization and Structured Domain Randomization techniques to close the performance gap between models trained on real data and models trained on synthetic data. We make extensive use of distractors as well as random textures (real and synthetic) over the generated scenes to force the network to pay attention to target objects such as guns. An automated scene generation algorithm implements automated object-placement logic as well as an automated routing algorithm for natural movement (walking, running) of the objects.
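In its simplest form, domain randomization means drawing every nuisance parameter of a scene (camera pose, lighting, textures, number of distractors, noise) at random for each rendered frame, so the network cannot rely on any of them and must focus on the target object. The sketch below shows only that plain idea with Python's `random` module; every parameter name and range is a hypothetical placeholder rather than a setting of our engine, and it does not cover the context-aware placement that Structured Domain Randomization adds. A seeded generator makes a batch of scenes reproducible.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    camera_yaw_deg: float
    camera_pitch_deg: float
    fov_deg: float
    sun_elevation_deg: float  # stands in for time of day
    light_intensity: float
    weather: str
    texture_id: int           # index into a pool of real and synthetic textures
    num_distractors: int      # non-gun objects added to the scene
    noise_sigma: float


# Hypothetical value ranges, chosen only for illustration.
WEATHER = ["clear", "overcast", "rain", "fog"]


def sample_scene(rng: random.Random, num_textures: int = 1000) -> SceneParams:
    """Draw one independently randomized scene configuration."""
    return SceneParams(
        camera_yaw_deg=rng.uniform(-180.0, 180.0),
        camera_pitch_deg=rng.uniform(-30.0, 10.0),
        fov_deg=rng.uniform(40.0, 90.0),
        sun_elevation_deg=rng.uniform(-10.0, 80.0),
        light_intensity=rng.uniform(0.2, 2.0),
        weather=rng.choice(WEATHER),
        texture_id=rng.randrange(num_textures),
        num_distractors=rng.randint(0, 20),
        noise_sigma=rng.uniform(0.0, 0.05),
    )


rng = random.Random(42)  # fixed seed -> reproducible batch of scenes
scenes = [sample_scene(rng) for _ in range(5)]
```

Controlling the distribution of a property, as listed among the advantages above, amounts to swapping the uniform draw for that parameter with whatever distribution the training set should follow.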

Below is an illustration of the experiment “Using Synthetic Data to Improve the Accuracy of the DNN Model When the Amount of Available Real Data Is Limited”.

Here Is How We Did It

To quickly verify that synthetic data works, we trained a DNN model using the Detectron framework and its available implementation of the Faster R-CNN architecture. First, Faster R-CNN was trained on synthetic data for 90K iterations and then trained further on real data for another 90K iterations; its accuracy was compared against a model trained on real data only for 90K iterations. The batch size was 512, and all other hyperparameters were left at the Detectron framework's default values. Thanks to FAIR for such a great framework! (Note: further hyperparameter optimization is certainly possible, and we leave it to the research community to pursue.)

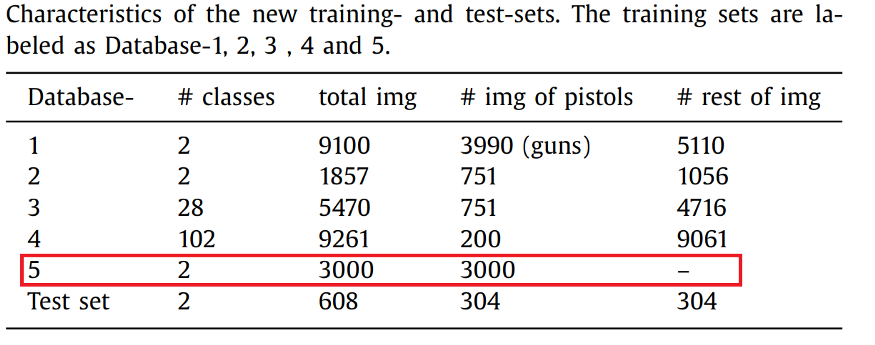
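The two compared training protocols can be written down as stage lists, together with a step learning-rate schedule. This is a sketch under stated assumptions: the schedule values (base learning rate 0.02, decay by 10x at 60K and 80K iterations, no warm-up) are modelled on the "1x" schedule of Detectron's Faster R-CNN baseline configs, and restarting the schedule from stage-1 weights for the real-data stage is also an assumption; the experiment description above only says that defaults were used.

```python
from typing import List, Sequence, Tuple

Stage = Tuple[str, str, int]  # (stage name, training data, iterations)


def step_lr(it: int, base_lr: float = 0.02,
            steps: Sequence[int] = (60_000, 80_000), gamma: float = 0.1) -> float:
    """Step schedule (assumed values): multiply base_lr by gamma at each boundary passed."""
    num_decays = sum(1 for s in steps if it >= s)
    return base_lr * gamma ** num_decays


SYNTHETIC_BACKED: List[Stage] = [
    ("pretrain", "synthetic", 90_000),
    ("finetune", "real", 90_000),  # assumption: starts from stage-1 weights, schedule restarts
]
REAL_ONLY: List[Stage] = [
    ("train", "real", 90_000),
]


def total_iters(plan: List[Stage]) -> int:
    """Total optimizer iterations across all stages of a plan."""
    return sum(iters for _, _, iters in plan)


print(total_iters(SYNTHETIC_BACKED), total_iters(REAL_ONLY))  # 180000 90000
```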

The real gun images were collected by researchers at the Soft Computing and Intelligent Information Systems group (see Table 1).

Table 1. The Structure of the Available Gun Images

For this experiment, we used the images from Database-5.

Olmos, R., Tabik, S., & Herrera, F. (2018). Automatic handgun detection alarm in videos using deep learning. Neurocomputing, 275, 66–72.

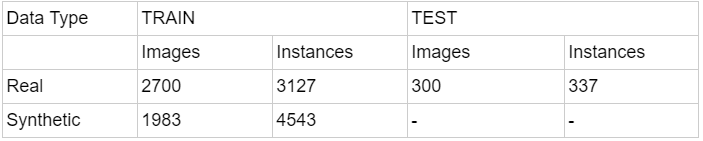

Because the test set lacks annotations, we held out 300 of the 3,000 training images in Database-5 as a test set and used it to evaluate and compare the pure real and synthetic-backed models. Table 2 shows how the real and synthetic images, as well as the annotated instances/objects in them, were split. We did not hold out any synthetic test data, since our primary goal was to analyze the behaviour of the DNN model on a test set of real images.

Table 2. The Amount of Train/Test Images and Instances

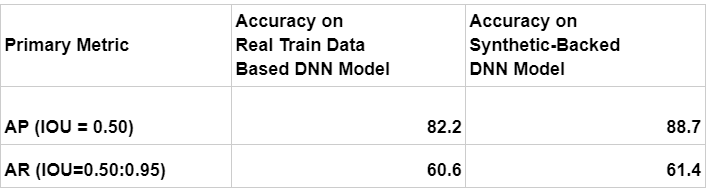
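Holding out a test set like this is a random split at the image level, so that all instances in one image stay on the same side. A minimal sketch, assuming the 3,000 Database-5 images are identified by hypothetical integer IDs and that a fixed seed is used so the split can be reproduced (how the 300 images were actually chosen is not specified here):

```python
import random
from typing import List, Sequence, Tuple


def holdout_split(image_ids: Sequence[int], test_size: int,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly move `test_size` images into a test set; the rest remain for training."""
    if not 0 < test_size < len(image_ids):
        raise ValueError("test_size must be between 1 and len(image_ids) - 1")
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    test, train = ids[:test_size], ids[test_size:]
    return sorted(train), sorted(test)


train_ids, test_ids = holdout_split(range(3000), test_size=300, seed=2021)
print(len(train_ids), len(test_ids))  # 2700 300
```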

Because in this use case it was much more important (even critical) to determine whether a gun is present in the image than to measure how accurately the detected bounding box fits the real object, AP at IoU = 0.50 (the PASCAL VOC metric) was chosen as the primary accuracy metric. As Table 3 shows, the synthetic-backed model improves the primary metric by about 6.5% over the model trained only on real images (Real Train).

Table 3. Accuracy comparison of the real-only and synthetic-backed models
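For readers less familiar with the metric: detections are processed in descending score order, a detection counts as a true positive if its best-overlapping ground-truth box in the same image has IoU of at least 0.5 and has not already been matched, and AP is the area under the resulting precision-recall curve. The sketch below implements a single-class, all-point-interpolated version in the style of PASCAL VOC (2010 and later). It is an illustration and not necessarily the evaluation code behind Table 3; COCO-style evaluators, for example, interpolate at 101 recall points, so numbers can differ slightly.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Tuple[str, float, Box]],
                      gts: Dict[str, List[Box]], thr: float = 0.5) -> float:
    """dets: (image_id, score, box); gts: image_id -> ground-truth boxes (one class)."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gts.items()}
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for img, _, box in sorted(dets, key=lambda d: -d[1]):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            o = iou(box, g)
            if o > best:
                best, best_j = o, j
        if best_j >= 0 and best >= thr and not used[img][best_j]:
            used[img][best_j] = True  # first match of this ground truth: true positive
            tp += 1
        else:
            fp += 1  # no sufficient overlap, or a duplicate detection
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # All-point interpolation: make precision non-increasing from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


gts = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
dets = [("img1", 0.9, (0, 0, 10, 10)),
        ("img2", 0.8, (20, 20, 30, 30)),  # misses: false positive
        ("img2", 0.7, (1, 1, 10, 10))]    # IoU 0.81: true positive
ap50 = average_precision(dets, gts)
```

In this toy example the ranked results are TP, FP, TP, giving recall 0.5, 0.5, 1.0 and AP@0.5 = 0.5·1 + 0.5·(2/3) ≈ 0.833.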

During our experiments with gun detection DNN models, we noticed that the advantage of the network trained with synthetic data is most pronounced on images where the gun is relatively small and held in a person’s hand, which is vital for this task. This may be because Improvis generated synthetic images specifically for these hard-to-find cases, while such real images are difficult to obtain in the wild. Pic. 2 below shows a visual comparison of the DNN models’ accuracy on the test images and the (possibly life-saving) advantage of the synthetic-backed DNN model over the pure real one.

Pic. 2. Visual comparison of DNN models’ accuracy: model trained on real data only vs. synthetic-backed model.
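An observation like "the gain is largest on small guns" can be checked quantitatively by breaking the test set down by object size. A minimal sketch using the COCO area convention (small: area below 32² pixels, medium: below 96², large: the rest, with boundary handling simplified); applying these thresholds to this experiment is our illustration and was not part of the evaluation reported above. The areas in the example are made up.

```python
from collections import Counter
from typing import Iterable

SMALL_MAX = 32 ** 2   # COCO "small": area below 32x32 pixels
MEDIUM_MAX = 96 ** 2  # COCO "medium": area below 96x96 pixels


def size_bucket(area: float) -> str:
    """Assign an annotation area (in pixels) to a COCO-style size bucket."""
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def bucket_counts(areas: Iterable[float]) -> Counter:
    """Count how many objects fall into each size bucket."""
    return Counter(size_bucket(a) for a in areas)


# Hypothetical gun annotation areas (pixels) from a test set.
counts = bucket_counts([400, 900, 2500, 12000])
print(counts)
```

Computing AP separately for each bucket would then show whether the synthetic-backed model's improvement concentrates on small objects.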

We have made all the data and scripts publicly available, and anyone is welcome to reproduce our experiments using our GitHub repository. All related information, including links to the train and test datasets, is in the README; the annotations follow the COCO format.
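COCO-format annotation files are plain JSON with top-level `images`, `annotations`, and `categories` lists; each annotation carries an `image_id`, a `category_id`, a `bbox` in `[x, y, width, height]` order, and an `area`. The sketch below parses such a structure with the standard `json` module and counts instances per category. The inline example, its file name, and its category name are made up; the actual file names and categories are listed in the README.

```python
import json
from collections import Counter
from typing import Any, Dict


def instances_per_category(coco: Dict[str, Any]) -> Dict[str, int]:
    """Count annotated instances per category name in a COCO-style dict."""
    names = {c["id"]: c["name"] for c in coco["categories"]}
    counts = Counter(names[a["category_id"]] for a in coco["annotations"])
    return dict(counts)


# Made-up minimal example. For a real file:
#   with open(path) as f:
#       coco = json.load(f)
example = json.loads("""
{
  "images": [{"id": 1, "file_name": "000001.png", "width": 640, "height": 480}],
  "annotations": [
    {"id": 10, "image_id": 1, "category_id": 1,
     "bbox": [100, 120, 40, 25], "area": 1000, "iscrowd": 0}
  ],
  "categories": [{"id": 1, "name": "gun"}]
}
""")
print(instances_per_category(example))  # {'gun': 1}
```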

The dataset consists of:

- 25,000 images of indoor scenes, with guns held in a person’s hand or placed in the scene: images + annotations, depth maps, semantic masks.

- 10,000 images of outdoor scenes, with guns held in a person’s hand: images + annotations, depth maps, semantic masks.

- 6,500 images of classified guns of 24 different types: images + annotations, depth maps, semantic masks.

We believe this is only the beginning of using synthetic data to help solve detection and classification problems for the broader community. If you have any questions or comments, or need more data, please reach out to info@edgecase.ai.

Improvis Team

September 14, 2021